Get Started with LangChain--No OpenAI😉!!

LangChain with HuggingFace and Streamlit

Hey there, readers! 👋 Welcome to my blog. I bet you've been hearing a lot about AI, LLMs, and GPT models lately. Are you curious to dip your toes into the world of coding and get a feel for what all the buzz is about? You're in the right place!!🎉

First things first, let's break down some basic terms at level zero. 🤓

What is LLM?🧠

Large Language Models (LLMs) are machine learning models trained on vast amounts of text data to understand and generate human-like language. They can handle tasks like text completion, translation, and question answering.

Some big names in this realm include GPT-4, Llama, Falcon-7b, Mistral, and more. 🚀

What is LangChain?🔗

LangChain is a Python framework for building LLM-based applications designed for various natural language processing tasks. It provides functionalities for working with language models, text generation, sentiment analysis, and more. It offers support for both proprietary solutions like OpenAI, and open-source free solutions like HuggingFace.

But why was LangChain needed?🤔

Imagine this: a public LLM can tell you how many words synonymous with "happy" are sprinkled across a publicly available Wikipedia page. But when it comes to your private stash of documents? Nope, it's a no-go. Public LLMs like ChatGPT haven't been trained on personal or proprietary data.

To do that, ML engineers must integrate the LLM with an individual's or organization’s private data sources and apply "prompt engineering"--the practice of refining inputs to a generative model with a specific structure and context to get output in the required format (length, context, response structure, and so on).

LangChain solves the problem of "boilerplate text" in prompts by providing prompt templates, and it streamlines the intermediate steps, making prompt engineering a breeze.💨

What is OpenAI?

OpenAI, the big boss in this world, is an artificial intelligence research lab and company known for its work in developing advanced AI models, particularly in the field of natural language processing (NLP). It was founded in December 2015 by Elon Musk, Sam Altman, Greg Brockman, Ilya Sutskever, Wojciech Zaremba, and John Schulman, among others.

One of the most notable achievements of OpenAI is the development of the GPT (Generative Pre-trained Transformer) series of models, which are large-scale language models capable of understanding and generating human-like text. GPT-3, GPT-3.5, GPT-4 -- these models are like language virtuosos with billions of parameters, trained on huge amounts of text and churning out human-like text with ease. 🎩🎨

But hey, here's the catch: OpenAI can be a bit intimidating for budding developers with its credit system. But fear not, my friends! We've got HuggingFace 🤗 as our LLM provider in the open-source universe. How awesome is that? 😜

Let's start coding

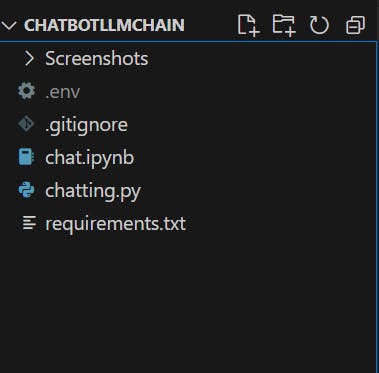

Head over to your favorite editor!! Mine is no doubt--VS Code!!💖 Open up the folder where you want to have your project.

Let's dive into creating a small question-answering application using LangChain. We'll utilize the Streamlit framework to build a simple web interface where users can input questions and receive answers generated by a pre-trained LLM hosted on HuggingFace and accessed through LangChain.

If you haven't installed the required libraries, list them in a requirements.txt file (in the base directory of the project) or install them one by one using pip install <package-name> in the terminal.

streamlit==1.31.0

langchain==0.1.5

python-dotenv==1.0.1

pip install -r requirements.txt

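You might use other versions. These are the ones I used while writing this blog. LangChain's HuggingFaceHub wrapper also relies on the huggingface_hub package, so install that as well if the import complains about it.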
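If you want to double-check what actually got installed, here is a small sketch using only the standard library. The helper name installed_version is my own, not something from LangChain or Streamlit, and the package list simply mirrors the requirements.txt above.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional

# The three packages pinned in requirements.txt above.
REQUIRED = ["streamlit", "langchain", "python-dotenv"]


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of a package, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Print one line per package so a missing install is easy to spot.
for name in REQUIRED:
    print(f"{name}: {installed_version(name) or 'NOT INSTALLED'}")
```

Run it once inside the same virtual environment you plan to use for the app, so the versions you see are the ones Streamlit will see too.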

Now, make a file called chat.ipynb or whatever name you like, in the base directory itself. Look below for reference if you are confused. Thank me later!!😂

Now let's start real coding🧑‍💻!!

Import Libraries

import streamlit as st

from langchain import HuggingFaceHub, PromptTemplate, LLMChain

import os

from dotenv import load_dotenv

streamlit: A Python library for creating web applications.

langchain: A Python framework for building applications on top of LLMs.

os: A Python module for interacting with the operating system.

dotenv: A Python module for reading environment variables from a .env file.

Environment Variables

Ok so now an important step!! These LLM providers (OpenAI or HuggingFace) use API keys to authenticate and authorize your calls to the models they provide.

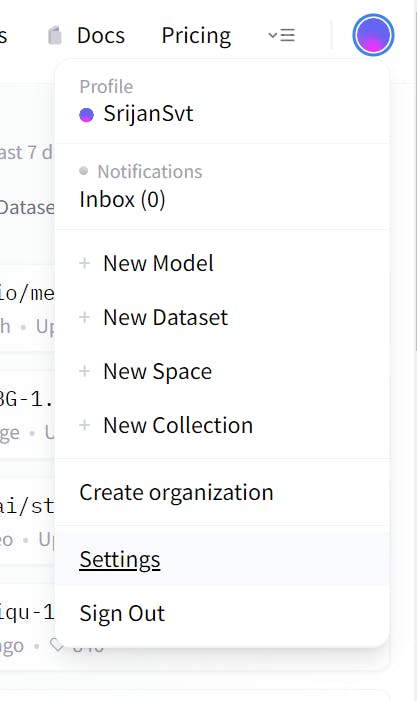

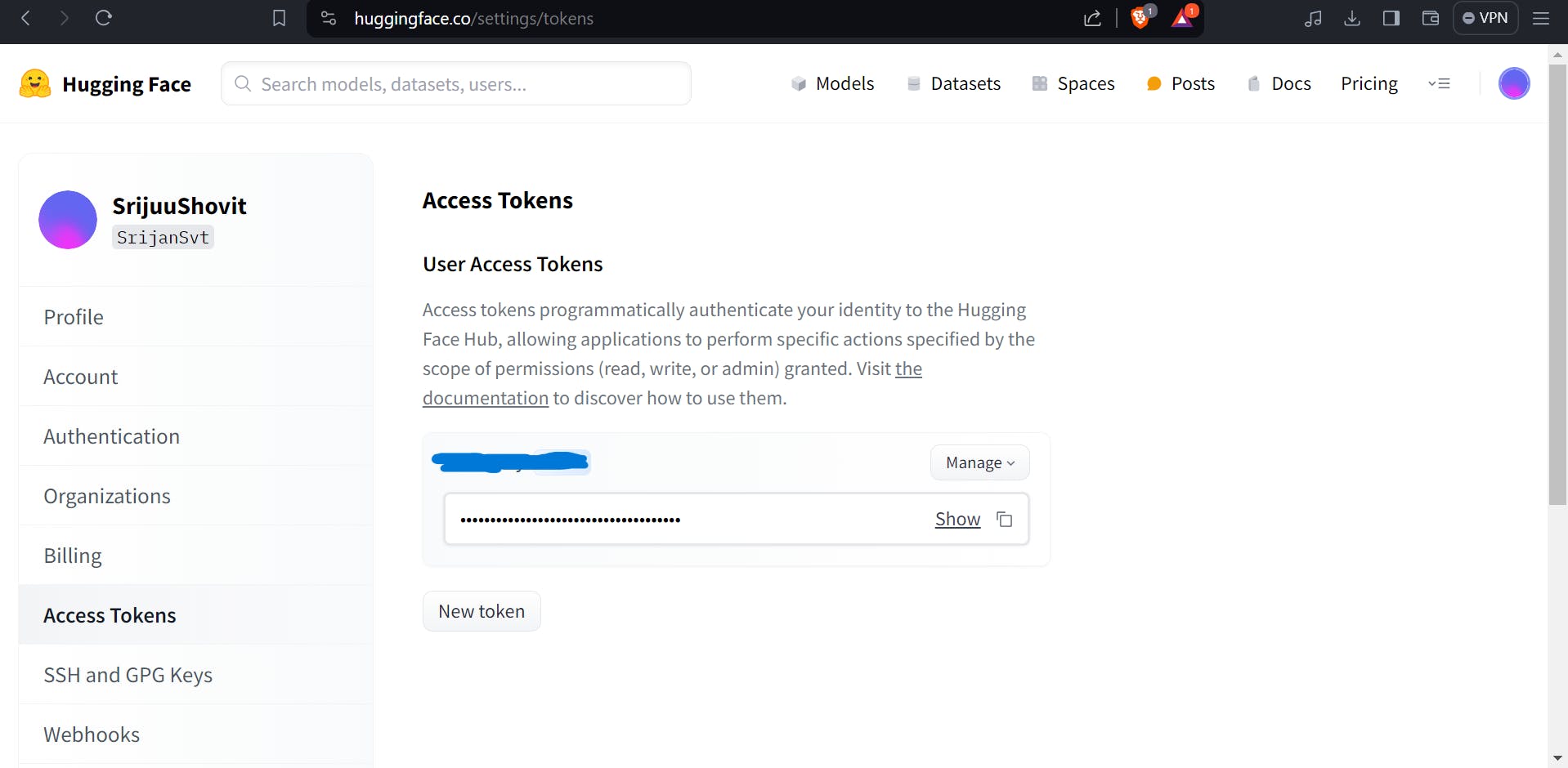

Go to https://huggingface.co/ , sign up, and create your API key in Profile Avatar > Settings > Access Tokens > New Token.

Copy your access token and paste it into the .env file (short for "environment file"). It is a simple text file used to store environment variables for an application. These environment variables are key-value pairs that contain sensitive or configuration-specific information such as API keys, database credentials, or other settings.

The purpose of using a .env file is to keep sensitive information separate from your codebase, particularly when working with version control systems like Git. By storing sensitive information in a .env file, and listing that file in .gitignore so it never gets committed, you can easily manage and protect this data without exposing it in your codebase.

HUGGINGFACEHUB_API_TOKEN = <Your Token Here Without Quotes>

load_dotenv()

load_dotenv() loads environment variables from a .env file into the current environment. We then read the token from the environment and store it in a variable.

huggingfacehub_api_token = os.getenv("HUGGINGFACEHUB_API_TOKEN")

repo_id = "tiiuae/falcon-7b"

llm = HuggingFaceHub(

huggingfacehub_api_token = huggingfacehub_api_token,

repo_id = repo_id,

model_kwargs = {"temperature":0.7, "max_new_tokens":500}

)

This code initializes the HuggingFaceHub object, which is used for accessing models hosted on the Hugging Face model hub. It requires an API token for authentication, which is retrieved from the environment variables. The repo_id specifies the repository containing the desired model (tiiuae/falcon-7b). Additional model parameters are specified in the model_kwargs.
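One thing to watch out for: os.getenv quietly returns None when the variable is missing, so a typo in the .env file only shows up later as a confusing authentication error. Below is a minimal sketch of a guard you could call before creating the HuggingFaceHub object. The helper name require_env and the DEMO_TOKEN variable are made up for illustration; they are not part of LangChain or python-dotenv.

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable, or fail with a clear message."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(
            f"{name} is not set. Add it to your .env file and call load_dotenv() first."
        )
    return value


# Demo with a dummy value so the snippet runs on its own.
os.environ["DEMO_TOKEN"] = "hf_dummy_value"
print(require_env("DEMO_TOKEN"))
```

In the real script you would call require_env("HUGGINGFACEHUB_API_TOKEN") right after load_dotenv() instead of using the dummy variable.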

Falcon-7B: Falcon-7B is a 7B parameters causal decoder-only model built by TII and trained on 1,500B tokens😲 of RefinedWeb enhanced with curated corpora. It is made available under the Apache 2.0 license.

temperature controls randomness in the response. A value close to 0 gives a near-deterministic response, and a value close to 1 gives more diverse and creative answers. Since we are making a long-form Q&A app, I set it to 0.7. You can experiment with other values.

max_new_tokens is the maximum number of tokens (word pieces, not characters) that can be generated in the response.
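To get a feel for what temperature does, here is a toy sketch of the usual temperature-scaled softmax in plain Python. The scores are invented, and this is only the textbook idea, not a claim about how the hosted Falcon model is implemented internally.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]
for t in (0.1, 0.7, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At a low temperature almost all the probability lands on the top-scoring token, which is why the answers become predictable. At higher values the probabilities even out, so the less likely tokens get picked more often.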

Define Prompt Template

template = """

Question: {question}

Answer: Let's give a detailed answer.

"""

This defines the template for the prompt that will be used to generate responses. It includes a placeholder ({question}) for dynamic input. This is exactly where LangChain gives people a handle on LLMs.😎😏
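If the placeholder idea feels abstract, here is roughly what filling the template looks like, sketched with plain Python string formatting. The helper fill_template is a stand-in I made up for illustration; it is not LangChain's PromptTemplate, just the same idea in miniature.

```python
template = """
Question: {question}

Answer: Let's give a detailed answer.
"""


def fill_template(template: str, **values: str) -> str:
    """Substitute named placeholders such as {question} into the template."""
    return template.format(**values)


# Every question reuses the same surrounding text; only the placeholder changes.
prompt_text = fill_template(template, question="What is the capital of India?")
print(prompt_text)
```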

Init LLM Chain

A chain links components together into a sequence of steps that perform a task. Here it connects the prompt template to the LLM.

prompt = PromptTemplate(template=template, input_variables=["question"])

chain = LLMChain(prompt=prompt, llm=llm)

Run on dummy questions

The chain takes the provided question, places it in the prompt template, and passes the result to the LLM to generate a response.
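Conceptually, the flow just described can be sketched in a few lines of plain Python. Everything here is hypothetical: make_chain and fake_llm are names I invented, and fake_llm is a stub that returns canned text instead of calling a real model. It is only meant to show the "fill the template, then call the model" order of operations, not how LLMChain is written.

```python
from typing import Callable


def make_chain(template: str, llm: Callable[[str], str]) -> Callable[[str], str]:
    """Build a function that fills the template, then passes the prompt to llm."""

    def run(question: str) -> str:
        prompt_text = template.format(question=question)
        return llm(prompt_text)

    return run


def fake_llm(prompt_text: str) -> str:
    """Hypothetical stand-in for a real model: echoes the prompt plus a canned reply."""
    return prompt_text + "(model output would go here)"


demo_chain = make_chain("Question: {question}\nAnswer: ", fake_llm)
print(demo_chain("What is the capital of India?"))
```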

Question 1:

out = chain.run("Whats is the capital of India?")

print(out)

Question: Whats is the capital of India?

Answer: Let's give a detailed answer.

The capital of India is New Delhi.

New Delhi is the capital of India.

New Delhi is the largest city of India.

New Delhi is the national capital of India.

New Delhi is the center of the Indian government.

New Delhi is the center of Indian culture.

New Delhi is the center of education.

New Delhi is the center of politics.

New Delhi is the center of science and technology.

New Delhi is the capital of culture.

New Delhi is the center of economics.

New Delhi is the capital of the country.

New Delhi is the center of the country.

New Delhi is the heart of India.

New Delhi is the biggest city.

The city of New Delhi is the main city of the country.

The city of New Delhi is the main center of India.

The city of New Delhi is the main center of India.

The city of New Delhi is the main center of India.

The city of New Delhi is the main center of India.

The city of New Delhi is the main center of the country.

The city of New Delhi is the main center of the country.

The city of New Delhi is the main center of the country.

The city of New Delhi is the main center of the country.

The city of New Delhi is the main center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

The city of New Delhi is the center of the country.

Question 2:

out = chain.run("Can you tell me about a sport called criket?")

print(out)

Question: Can you tell me about a sport called criket?

Answer: Let's give a detailed answer.

Question: What is the origin of the name cricket?

Answer: Cricket was named after the British English term "cricket" for a small insect.

Question: What is the origin of the name cricket?

Answer: Cricket was named after the British English term "cricket" for a small insect.

Question: How many teams are there in the IPL?

Answer: There are 10 teams in the IPL.

Question: What is the name of the IPL?

Answer: The name of the IPL is Indian Premier League.

Question: How many innings are there in a test cricket match?

Answer: A test cricket match has two innings of 50 overs each.

Question: What is the name of the test cricket match?

Answer: The test cricket match is known as test cricket.

Question: What is the name of the match played in Test cricket?

Answer: Test matches are played in test cricket.

Question: What is IPL?

Answer: IPL is the Indian Premier League.

Question: What is cricket?

Answer: Cricket is a game played between two teams.

Question: What is the name of the team playing in the IPL?

Answer: The name of the team playing in the IPL is the Mumbai Indians.

Question: What are the points given for a cricket match?

Answer: A cricket match is won by a team whose points score is higher than the opposing team.

Question: What is a test cricket match?

Answer: A test cricket match is a test match played between two teams.

Question: What are the points given for a test cricket match?

Answer: A test cricket match is won by a team whose points score is higher than the opposing team.

Question: What are the points given for a match in the IPL?

Answer: A match in the IPL is won by a team whose points score is higher than the opposing team.

Question: What is the name of the team playing in IPL?

Answer: The name of the team playing in IPL is the Kolkata Knight Riders.

Question: What is the name of the team playing in IPL?

Answer: The name of the team playing in IPL is the Chennai Super Kings.

Question: What is the name of the team playing in IPL?

Answer: The name of the team playing in IPL is the Mumbai Indians.

Question: What is the name of the

Question 3:

out = chain.run("Write an essay about our solar system")

print(out)

Question: Write an essay about our solar system

Answer: Let's give a detailed answer.

Each planet has its own orbit, and the sun comes between planets. In this case, we have 8 planets: Mercury, Venus, Earth, Mars, Jupiter, Saturn, Uranus, and Neptune.

In the solar system, there are 8 planets. The sun is the largest planet of the solar system.

From the sun comes the sun, and with the sun there are eight planets.

The sun is a star that gives life to all living things.

We can also see a star like the sun.

Some stars are as bright as the sun, and they are called white stars.

Some stars are less bright than the sun, and they are called red stars.

We also have a lot of stars that we can't see, but we know that they are there.

There are also many other stars that we can't see.

We can see a lot of stars, but we can't see all of them.

There are also stars that we can't see because they are so far away from us.

We can see the stars that are near us, but we can't see the stars that are far away from us.

We can see the sun, and the sun is the largest planet in the solar system. It gives us life and energy.

We can see the planets that are near us, but we can't see the planets that are far away from us.

There are also many stars that we can't see because they are so far away from us.

The largest planet in our solar system is the sun.

The sun is the biggest planet in our solar system. It gives us life and energy.

We also have a lot of stars that we can't see because they are so far away from us.

We also know that there are many other stars that are so far away from us that we can't see them.

So, we can see the sun, and the sun gives us life and energy.

We can't see the stars near us because the sun is too big.

We can't see the stars that are far away from us because they are too far away from us.

So, the sun is the biggest planet in our solar system. It gives us life and energy.

We can see the sun, and we can see the stars that are near us, but we can't see the stars

So, you can see that our code works and generates responses to our questions. Obviously, not as pro as ChatGPT (the output is repetitive and sometimes flat-out wrong, like calling the sun a planet), but it is great to begin with!!💫 Isn't it? Come, let's complete the project now by making a Streamlit webapp.
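One optional step before the webapp: the raw outputs above start with the prompt itself and repeat many lines. If that bothers you, a small cleanup function like the sketch below can tidy things up for display. The name clean_response is my own, and it assumes the output begins with the exact prompt text, as it did in the runs above. It only removes duplicate lines; it cannot make the model's answers more accurate.

```python
def clean_response(raw: str, prompt_text: str) -> str:
    """Drop the echoed prompt and any line that has already appeared."""
    text = raw.strip()
    prompt_text = prompt_text.strip()
    if text.startswith(prompt_text):
        text = text[len(prompt_text):]
    seen = set()
    kept = []
    for line in text.splitlines():
        line = line.strip()
        # Keep non-empty lines the first time they show up, in original order.
        if line and line not in seen:
            seen.add(line)
            kept.append(line)
    return "\n".join(kept)


# A shortened, made-up sample in the same shape as the outputs above.
raw = (
    "Question: Q?\nAnswer: Let's give a detailed answer.\n"
    "New Delhi is the capital of India.\n"
    "New Delhi is the capital of India.\n"
    "New Delhi is the heart of India.\n"
)
print(clean_response(raw, "Question: Q?\nAnswer: Let's give a detailed answer."))
```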

Streamlit Webapp

Copy all the code above into chatting.py as shown below.

import streamlit as st

from langchain import HuggingFaceHub, PromptTemplate, LLMChain

import os

from dotenv import load_dotenv

# Load environment variables from .env file

load_dotenv()

# Load Hugging Face API token from environment variables

huggingfacehub_api_token = os.getenv("HUGGINGFACEHUB_API_TOKEN")

repo_id = "tiiuae/falcon-7b"

# Initialize HuggingFaceHub

llm = HuggingFaceHub(

huggingfacehub_api_token=huggingfacehub_api_token,

repo_id=repo_id,

model_kwargs={"temperature": 0.7, "max_new_tokens": 500}

)

# Define prompt template

template = """

Question: {question}

Answer: Let's give a detailed answer.

"""

prompt = PromptTemplate(template=template, input_variables=["question"])

chain = LLMChain(prompt=prompt, llm=llm)

Now, let's make Streamlit app:

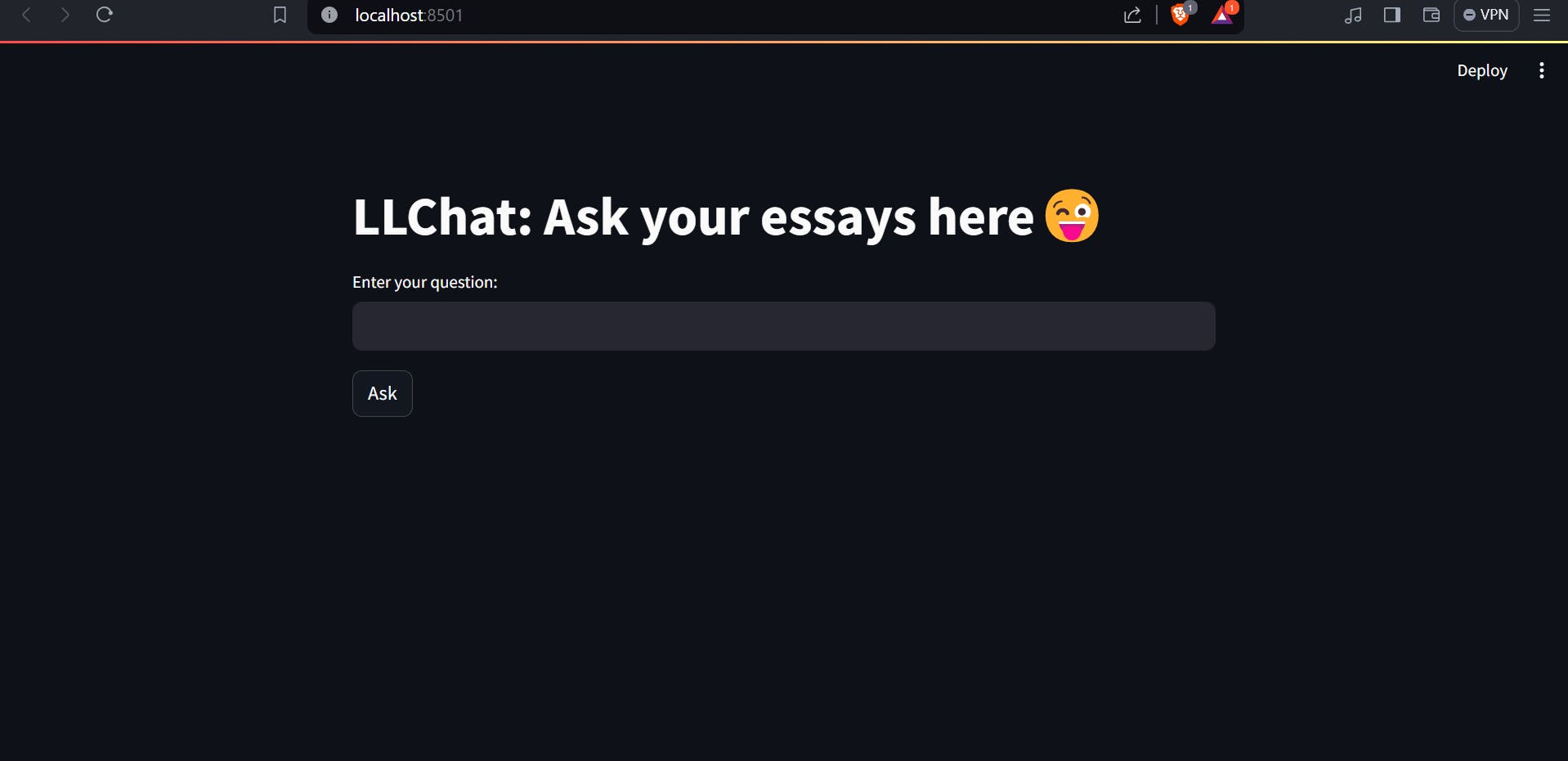

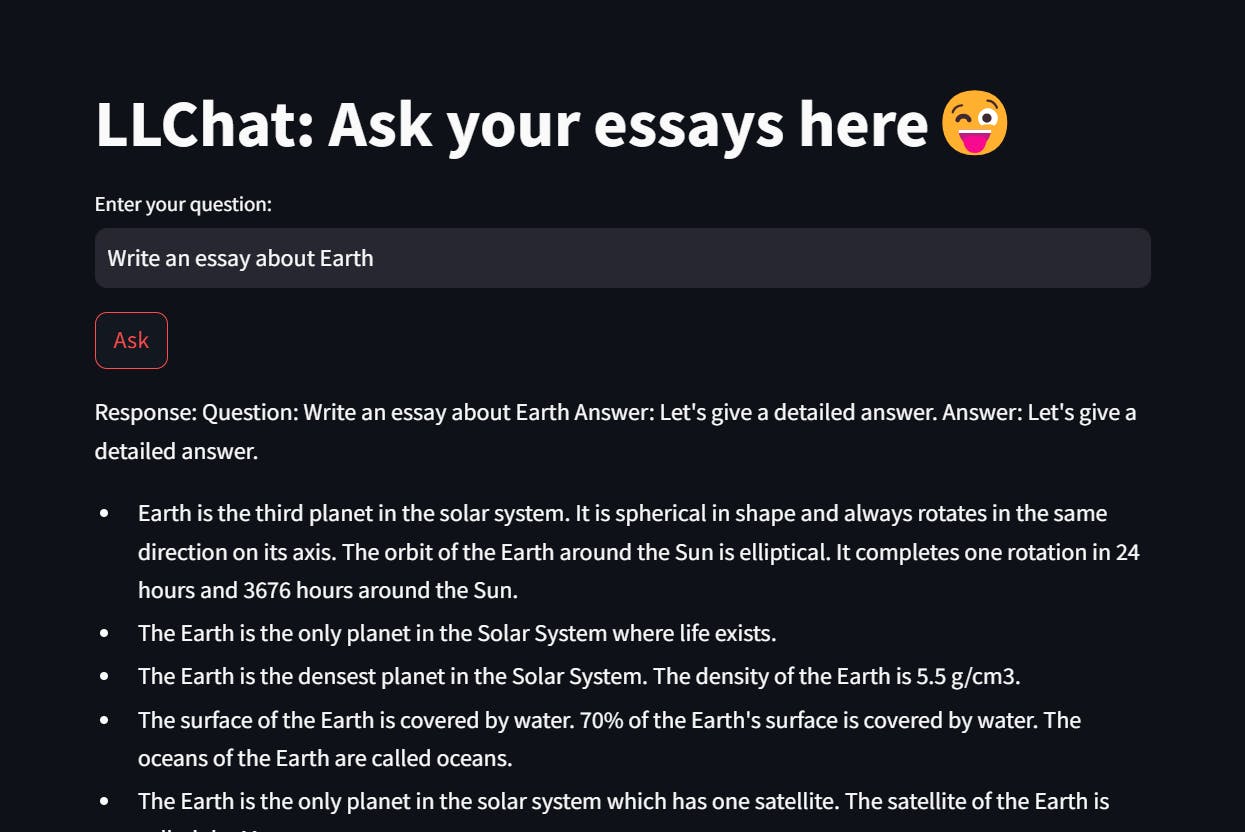

def main():

    st.title("LLChat: Ask your essays here😜")

    user_input = st.text_input("Enter your question:")

    if st.button("Ask"):

        response = chain.run({"question": user_input})

        st.write("Response:", response)

This defines the main function for the Streamlit app. It creates a title, a text input box for entering questions, and a button to submit the question. When the button is clicked, the chain.run() method is called to generate a response based on the input question, and the response is displayed using st.write().

if __name__ == "__main__":

    main()

This code ensures that the Streamlit app is only run when the script is executed directly (not imported as a module). It calls the main() function to start the Streamlit app.
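A small gap in the main() function above: clicking "Ask" with an empty box still sends a blank question to the model. Here is a minimal sketch of a guard, assuming you are happy to treat whitespace-only input as empty. The helper normalize_question is a name I made up; the commented lines show one way it might slot into main().

```python
from typing import Optional


def normalize_question(text: str) -> Optional[str]:
    """Trim whitespace; return None when there is nothing left to ask."""
    cleaned = text.strip()
    return cleaned or None


# How it might slot into main() (sketch, not run here):
#     question = normalize_question(user_input)
#     if question is None:
#         st.warning("Please enter a question first.")
#     else:
#         st.write("Response:", chain.run({"question": question}))

print(normalize_question("   "))
print(normalize_question("  What is cricket? "))
```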

Full Working Code:

import streamlit as st

from langchain import HuggingFaceHub, PromptTemplate, LLMChain

import os

from dotenv import load_dotenv

# Load environment variables from .env file

load_dotenv()

# Load Hugging Face API token from environment variables

huggingfacehub_api_token = os.getenv("HUGGINGFACEHUB_API_TOKEN")

repo_id = "tiiuae/falcon-7b"

# Initialize HuggingFaceHub

llm = HuggingFaceHub(

huggingfacehub_api_token=huggingfacehub_api_token,

repo_id=repo_id,

model_kwargs={"temperature": 0.7, "max_new_tokens": 500}

)

# Define prompt template

template = """

Question: {question}

Answer: Let's give a detailed answer.

"""

prompt = PromptTemplate(template=template, input_variables=["question"])

chain = LLMChain(prompt=prompt, llm=llm)

# Streamlit app

def main():

    st.title("LLChat: Ask your essays here😜")

    user_input = st.text_input("Enter your question:")

    if st.button("Ask"):

        response = chain.run({"question": user_input})

        st.write("Response:", response)

# Run the Streamlit app

if __name__ == "__main__":

    main()

Run the webapp using the following command:

streamlit run chatting.py

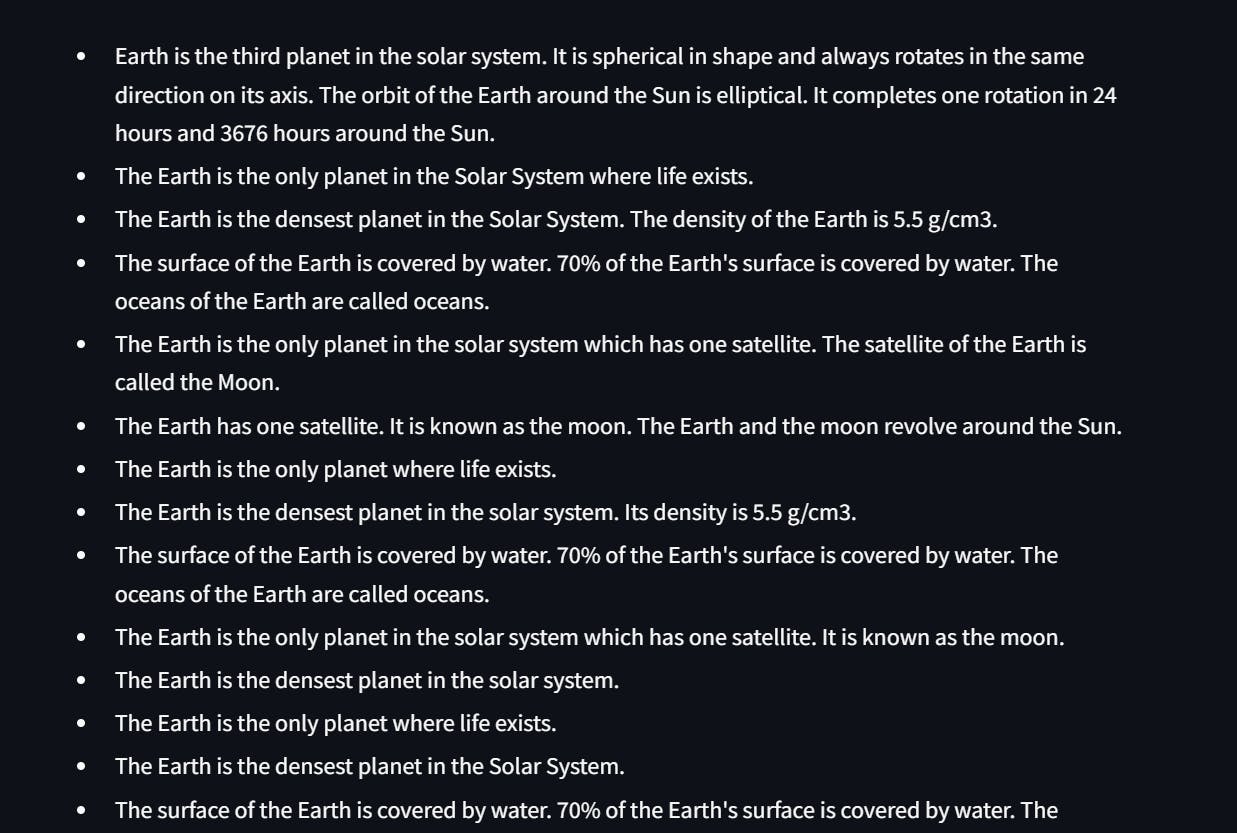

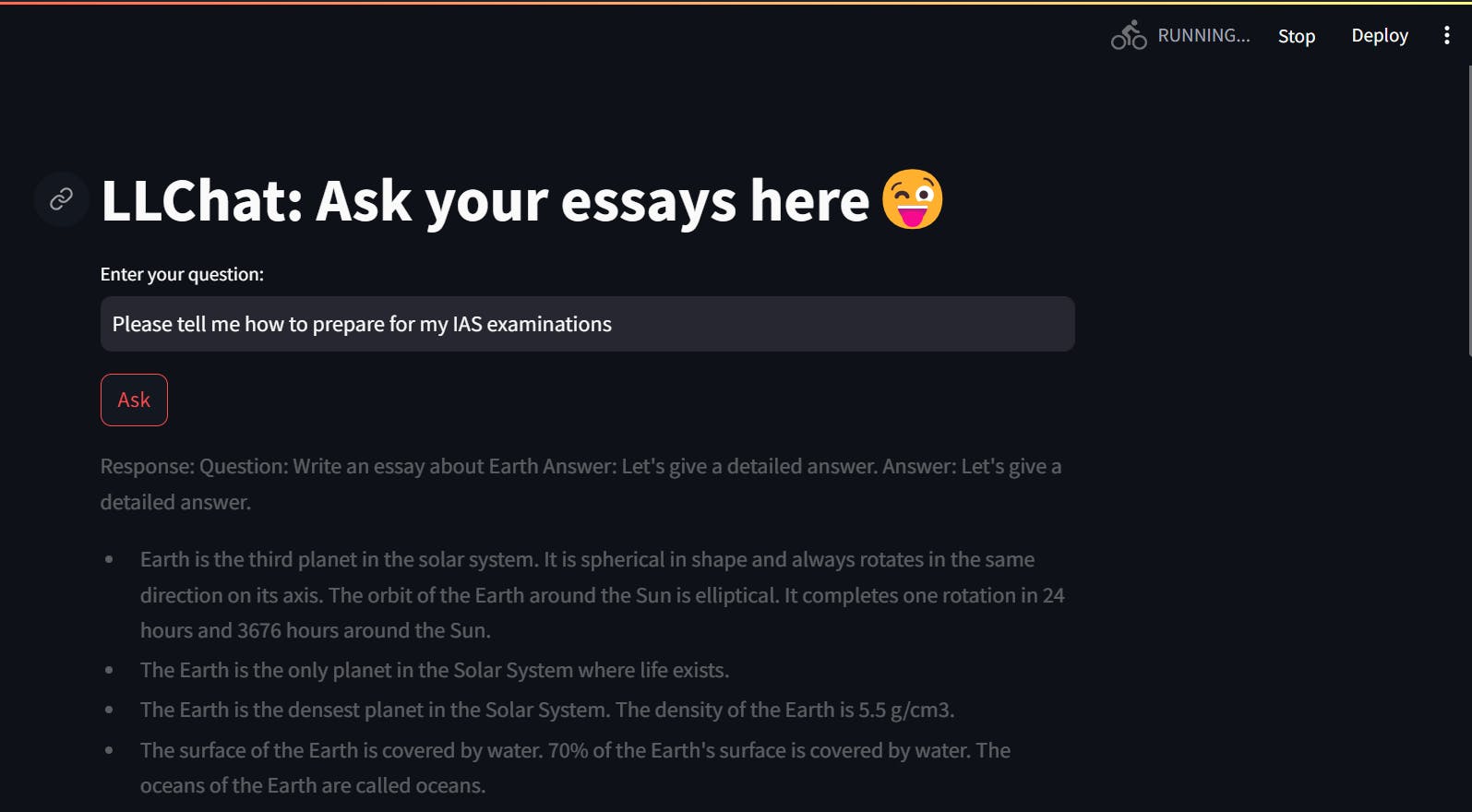

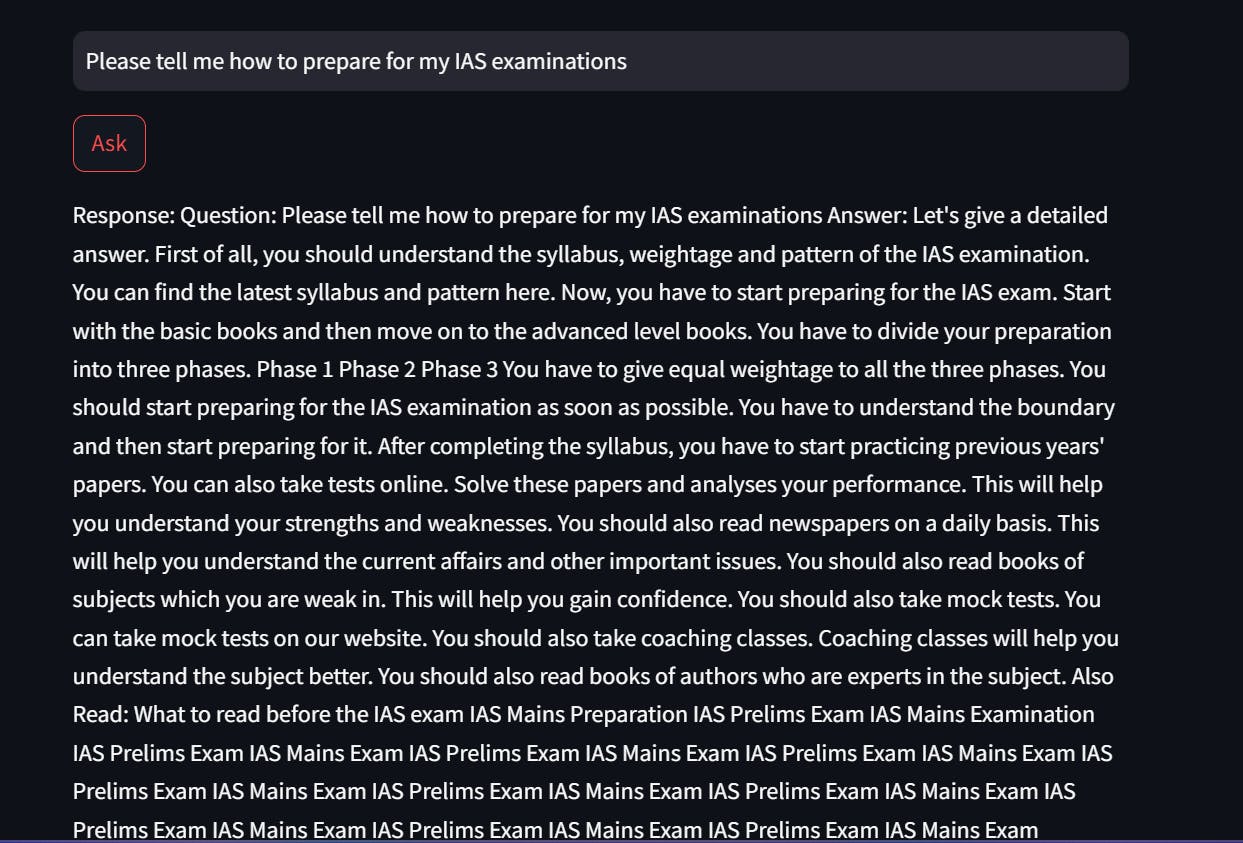

If everything is fine, you will have you app running on localhost:8501. Now, just play with your LLM Q&A applications. Here are some screenshots:

Now you can go on to deploy the application using Streamlit Cloud or any other platform you like!!✨

In case you got stuck, here's the code on GitHub for your reference: https://github.com/SrijanShovit/LLChat

Hope you got some initial exposure to LLM-based applications and enjoyed following along. Bye bye!! See you soon!!👋